Build an Android chat application with ONNX Runtime API

Build an Android chat app

Another way to run the model is through an Android GUI app. The onnxruntime-inference-examples repository includes an Android demo application that you can use to demonstrate local inference.

Clone the repo

git clone https://github.com/microsoft/onnxruntime-inference-examples

cd onnxruntime-inference-examples

git checkout 7a635daae48450ff142e5c0848a564b245f04112

A later commit may also work, but these steps were tested with commit 7a635daae48450ff142e5c0848a564b245f04112.
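To confirm that the checkout landed on the tested commit, you can compare the current commit hash against the expected one. The following is a minimal sketch, assuming a POSIX-style shell such as Git Bash; `verify_commit` is a hypothetical helper name used for illustration and is not part of git.

```shell
# Commit that the steps in this section were tested with.
EXPECTED_COMMIT=7a635daae48450ff142e5c0848a564b245f04112

# verify_commit ACTUAL_HASH
# Hypothetical helper: succeeds only if ACTUAL_HASH equals EXPECTED_COMMIT.
verify_commit() {
  if [ "$1" = "$EXPECTED_COMMIT" ]; then
    echo "OK: on tested commit"
  else
    echo "WARNING: on $1, expected $EXPECTED_COMMIT"
    return 1
  fi
}

# Usage, from inside the cloned repository:
#   verify_commit "$(git rev-parse HEAD)"
```

`git rev-parse HEAD` prints the full hash of the commit currently checked out, so a mismatch here means the `git checkout` step did not take effect.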

Build the app using Android Studio

Open the mobile\examples\phi-3\android directory with Android Studio.

(Optional) Use the ONNX Runtime AAR files you built

Copy the ONNX Runtime AAR and the ONNX Runtime GenAI AAR that you built earlier in this learning path into the app's libs directory:

Copy onnxruntime\build\Windows\Release\java\build\android\outputs\aar\onnxruntime-release.aar onnxruntime-inference-examples\mobile\examples\phi-3\android\app\libs\

Copy onnxruntime-genai\build\Android\Release\src\java\build\android\outputs\aar\onnxruntime-genai-release.aar onnxruntime-inference-examples\mobile\examples\phi-3\android\app\libs\
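Before syncing the Gradle project, it can help to confirm that both AAR files are present in the libs directory. The following is a rough sketch, assuming a POSIX-style shell such as Git Bash; `check_aars` is a hypothetical helper name, and the two file names are the ones used in the copy commands above.

```shell
# check_aars LIBS_DIR
# Hypothetical helper: reports whether each expected AAR exists in LIBS_DIR.
# Returns 0 if both are present, 1 if at least one is missing.
check_aars() {
  libs_dir="$1"
  missing=0
  for aar in onnxruntime-release.aar onnxruntime-genai-release.aar; do
    if [ -f "$libs_dir/$aar" ]; then
      echo "found $aar"
    else
      echo "missing $aar"
      missing=1
    fi
  done
  return "$missing"
}

# Usage, from the directory that contains the cloned repository:
#   check_aars onnxruntime-inference-examples/mobile/examples/phi-3/android/app/libs
```

If either file is reported missing, re-check the build output paths from the earlier steps before continuing.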

Update build.gradle.kts (:app) so that the published dependencies are commented out and the local AAR files are used instead:

// ONNX Runtime with GenAI

//implementation("com.microsoft.onnxruntime:onnxruntime-android:latest.release")

implementation(files("libs/onnxruntime-release.aar"))

//implementation(files("libs/onnxruntime-genai-android-0.8.1.aar"))

implementation(files("libs/onnxruntime-genai-release.aar"))

Finally, click File > Sync Project with Gradle Files.

Build and run the app

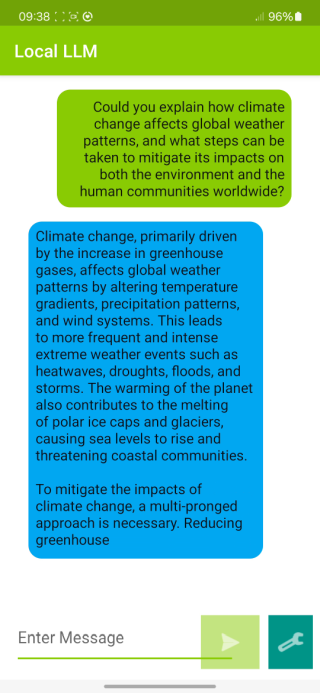

When you select Run, Android Studio builds the app, then copies it to the connected Android device and installs it. The app automatically downloads the Phi-3-mini model the first time it runs. After the download completes, enter a prompt in the text box and submit it to run the model.

You should now see a running app on your phone, which looks like this: