Run AI models with Docker Model Runner


Simplified Local LLM Inference

Docker Model Runner is an official Docker extension that allows you to run Large Language Models (LLMs) directly on your local computer. It provides a convenient way to deploy and use AI models across different environments, including Arm-based systems, without complex framework setup or cloud dependencies.

Docker Model Runner uses llama.cpp, an open-source C/C++ project developed by Georgi Gerganov that enables efficient LLM inference on a variety of hardware. You do not need to download, build, or install any LLM frameworks yourself.

Docker Model Runner provides an easy-to-use CLI that is familiar to Docker users.

Before you begin

Verify Docker is running with:

```bash
docker version
```

You should see your Docker version shown in the output.

Confirm that Docker Desktop is version 4.40 or above, for example:

```output
Server: Docker Desktop 4.41.2 (191736)
```
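If you script your setup, you can check the minimum version automatically. The function below is a minimal sketch, assuming `docker version` prints a `Server: Docker Desktop X.Y.Z (build)` line like the example above; `desktop_version_ok` is a hypothetical name, not part of Docker.

```bash
#!/bin/sh
# Hypothetical helper (not part of Docker): read `docker version` output on
# stdin and succeed only if the Docker Desktop version is 4.40 or later.
desktop_version_ok() {
  # Pull "X.Y.Z" out of a line such as "Server: Docker Desktop 4.41.2 (191736)".
  ver=$(sed -n 's/^[[:space:]]*Server: Docker Desktop \([0-9][0-9.]*\).*/\1/p' | head -n 1)
  [ -n "$ver" ] || return 1

  major=$(echo "$ver" | cut -d. -f1)
  minor=$(echo "$ver" | cut -d. -f2)

  # Compare numerically against the 4.40 minimum.
  [ "$major" -gt 4 ] || { [ "$major" -eq 4 ] && [ "${minor:-0}" -ge 40 ]; }
}
```

For example, `docker version | desktop_version_ok && echo "Docker Desktop version OK"` prints the message only when the server line reports 4.40 or later. If your output format differs, adjust the sed pattern.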

Make sure Docker Model Runner is enabled:

```bash
docker model --help
```

You should see output similar to this:

```output
Usage:  docker model COMMAND

Docker Model Runner

Commands:
  inspect     Display detailed information on one model
  list        List the available models that can be run with the Docker Model Runner
  logs        Fetch the Docker Model Runner logs
  pull        Download a model
  push        Upload a model
  rm          Remove models downloaded from Docker Hub
  run         Run a model with the Docker Model Runner
  status      Check if the Docker Model Runner is running
  tag         Tag a model
  version     Show the Docker Model Runner version
```

If Docker Model Runner is not enabled, enable it by following the Docker Model Runner documentation.
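To run the same check from a script, you can combine the version and status subcommands listed in the help output. This is a sketch that assumes both commands exit with a non-zero status when the plugin is missing or the runner is stopped; confirm that behavior on your Docker Desktop version. The function name `check_model_runner` is hypothetical.

```bash
#!/bin/sh
# Hypothetical helper: report whether the `docker model` plugin is installed
# and whether Docker Model Runner says it is running.
check_model_runner() {
  # Assumption: if the plugin is missing, `docker model version` fails.
  if ! docker model version >/dev/null 2>&1; then
    echo "docker model is not available: enable Docker Model Runner first" >&2
    return 1
  fi
  # Assumption: `docker model status` exits non-zero when the runner is stopped.
  if ! docker model status; then
    echo "Docker Model Runner is installed but not running" >&2
    return 1
  fi
}
```

Calling `check_model_runner` prints the status message on success and an explanation on standard error otherwise, so it can gate later steps such as pulling a model.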

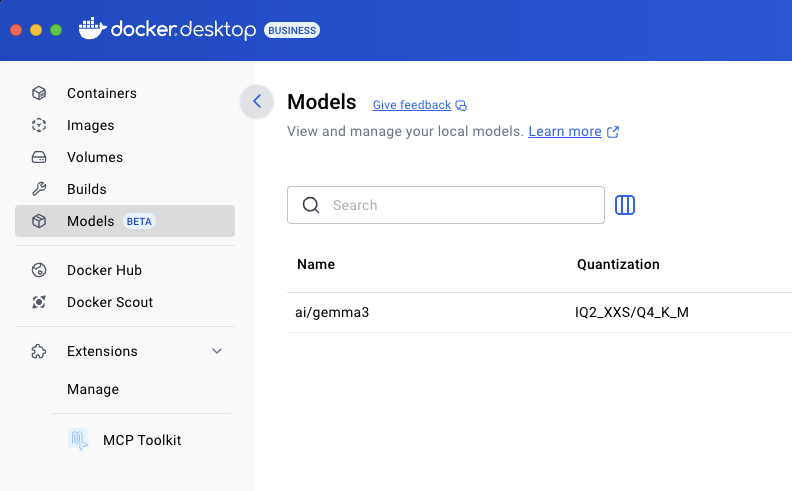

You should also see the Models tab and icon appear in your Docker Desktop sidebar.

Docker Models UI

Run your first AI model with Docker Model Runner

Docker Model Runner is an extension for Docker Desktop that simplifies running AI models locally.

Docker Model Runner automatically selects compatible model versions and optimizes performance for the Arm architecture.

You can try Model Runner by downloading and running a model from Docker Hub.

The example below uses the SmolLM2 model, a compact LLM with about 360 million parameters that is designed for efficient on-device inference while still handling a wide range of language tasks. You can explore more models on Docker Hub.

- Download the model

```bash
docker model pull ai/smollm2
```

- Run the model interactively

For a simple chat interface, run the model:

```bash
docker model run ai/smollm2
```

Enter a prompt at the CLI:

```text
write a simple hello world program in C++
```

You see output from the SmolLM2 model similar to the following (responses vary from run to run):

```cpp
#include <iostream>

int main() {
    std::cout << "Hello, World!" << std::endl;
    return 0;
}
```

To exit the chat, use the /bye command.

- View downloaded models

You can print the list of models on your computer using:

```bash
docker model list
```

Your list will differ depending on which models you have downloaded:

```output
MODEL NAME   PARAMETERS  QUANTIZATION    ARCHITECTURE  MODEL ID      CREATED       SIZE
ai/gemma3    3.88 B      IQ2_XXS/Q4_K_M  gemma3        0b329b335467  2 months ago  2.31 GiB
ai/phi4      14.66 B     IQ2_XXS/Q4_K_M  phi3          03c0bc8e0f5a  2 months ago  8.43 GiB
ai/smollm2   361.82 M    IQ2_XXS/Q4_K_M  llama         354bf30d0aa3  2 months ago  256.35 MiB
ai/llama3.2  3.21 B      IQ2_XXS/Q4_K_M  llama         436bb282b419  2 months ago  1.87 GiB
```
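Because `docker model list` shows what is already on disk, a setup script can skip redundant downloads. The sketch below assumes the first column of each data row is the model name exactly as you pass it, as in the listing above; if your version shows tags such as `:latest`, adjust the match. The name `ensure_model` is hypothetical.

```bash
#!/bin/sh
# Hypothetical helper: pull a model only if `docker model list` does not
# already show it. Assumes the MODEL NAME column matches the argument exactly.
ensure_model() {
  model="$1"
  # Skip the header row, then look for an exact match in the first column.
  # awk exits 0 when a match was found and 1 otherwise.
  if docker model list | awk -v m="$model" 'NR > 1 && $1 == m { found = 1 } END { exit !found }'; then
    echo "$model is already downloaded"
  else
    docker model pull "$model"
  fi
}
```

For a quick non-interactive test after that, `ensure_model ai/smollm2 && docker model run ai/smollm2 "write a simple hello world program in C++"` passes the prompt as an extra argument, which recent versions treat as a single exchange; run `docker model run --help` to confirm your version supports it.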

Use the OpenAI endpoint to call the model

Docker Model Runner exposes a REST endpoint compatible with OpenAI’s API spec.

From your host computer, you can access the model using the OpenAI endpoint and a TCP port.

First, enable TCP access to Docker Model Runner on port 12434 so you can connect to the model from your host:

```bash
docker desktop enable model-runner --tcp 12434
```
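Before sending requests, you may want to confirm that the port is actually accepting connections. The sketch below polls a URL with curl until it responds successfully or the retry budget runs out. `wait_for_endpoint` is a hypothetical name, and the `/engines/llama.cpp/v1/models` path in the usage example is an assumption based on the OpenAI API layout; substitute any endpoint you know your Model Runner version serves.

```bash
#!/bin/sh
# Hypothetical helper: poll an HTTP URL until it answers successfully or the
# retry budget runs out. Usage: wait_for_endpoint URL [TRIES] [DELAY_SECONDS]
wait_for_endpoint() {
  url="$1"
  tries="${2:-10}"
  delay="${3:-1}"
  i=1
  while [ "$i" -le "$tries" ]; do
    # -s: silent, -f: treat HTTP error statuses (4xx/5xx) as failures.
    if curl -sf "$url" >/dev/null; then
      echo "ready after $i attempt(s)"
      return 0
    fi
    sleep "$delay"
    i=$((i + 1))
  done
  echo "no response from $url after $tries attempts" >&2
  return 1
}
```

For example, `wait_for_endpoint http://localhost:12434/engines/llama.cpp/v1/models 10 1` waits up to roughly ten seconds before giving up.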

Next, use a text editor to save the code below in a file named curl-test.sh:

```bash
#!/bin/sh

curl http://localhost:12434/engines/llama.cpp/v1/chat/completions \
  -H "Content-Type: application/json" \
  -d '{
    "model": "ai/smollm2",
    "messages": [
      {
        "role": "system",
        "content": "You are a helpful assistant."
      },
      {
        "role": "user",
        "content": "Please write a hello world program in Java."
      }
    ]
  }'
```

Run the shell script:

```bash
bash ./curl-test.sh | jq
```

jq only pretty-prints the JSON response. If you don't have jq installed, drop the pipe to jq and the same JSON is printed unformatted.

The output, including performance information, looks similar to this:

```json
{
  "choices": [
    {
      "finish_reason": "stop",
      "index": 0,
      "message": {
        "role": "assistant",
        "content": "Here's a simple \"Hello World\" program in Java:\n\n```java\npublic class HelloWorld {\n public static void main(String[] args) {\n System.out.println(\"Hello, World!\");\n }\n}\n```\n\nThis program declares a `HelloWorld` class, defines a `main` method that contains the program's execution, and then uses `System.out.println` to print \"Hello, World!\" to the console."
      }
    }
  ],
  "created": 1748622685,
  "model": "ai/smollm2",
  "system_fingerprint": "b1-a0f7016",
  "object": "chat.completion",
  "usage": {
    "completion_tokens": 101,
    "prompt_tokens": 28,
    "total_tokens": 129
  },
  "id": "chatcmpl-uZGBuFoS2ERodT4KilStxDwhySLQBTN9",
  "timings": {
    "prompt_n": 28,
    "prompt_ms": 32.349,
    "prompt_per_token_ms": 1.1553214285714284,
    "prompt_per_second": 865.5599863983431,
    "predicted_n": 101,
    "predicted_ms": 469.524,
    "predicted_per_token_ms": 4.648752475247525,
    "predicted_per_second": 215.11147459980745
  }
}
```
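The throughput figures in the timings object follow from the raw counts. Judging from the values in this response, prompt_n and predicted_n match the prompt and completion token counts, and each rate is tokens divided by elapsed milliseconds, times 1000; for generation that is 101 / 469.524 × 1000 ≈ 215.11 tokens per second. The helpers below reproduce that arithmetic so you can compare runs; the relationship is inferred from this one sample, not taken from llama.cpp documentation, and the function names are hypothetical.

```bash
#!/bin/sh
# Recompute the rates in the "timings" object from a token count (*_n)
# and the elapsed time in milliseconds (*_ms).
tokens_per_second() {
  # tokens / milliseconds * 1000 = tokens per second
  awk -v n="$1" -v ms="$2" 'BEGIN { printf "%.2f\n", n / ms * 1000 }'
}

ms_per_token() {
  # milliseconds / tokens = average time spent per token
  awk -v n="$1" -v ms="$2" 'BEGIN { printf "%.2f\n", ms / n }'
}
```

Running `tokens_per_second 101 469.524` prints 215.11 and `ms_per_token 101 469.524` prints 4.65, matching predicted_per_second and predicted_per_token_ms above after rounding.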

You now have a fully functioning OpenAI-compatible inference endpoint running locally.
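If you plan to send many prompts, you might wrap the request from curl-test.sh in reusable shell functions. The sketch below is hypothetical (json_escape, build_payload, and ask are names made up for this example). It escapes only backslashes and double quotes, so prompts containing newlines or other control characters need a proper JSON tool such as jq instead.

```bash
#!/bin/sh
# Hypothetical helper: escape backslashes and double quotes so the text can
# sit inside a JSON string. Newlines and other control characters are NOT handled.
json_escape() {
  printf '%s' "$1" | sed -e 's/\\/\\\\/g' -e 's/"/\\"/g'
}

# Build a chat-completions request body for a model and one user prompt,
# using the same system message as curl-test.sh.
build_payload() {
  printf '{"model": "%s", "messages": [{"role": "system", "content": "You are a helpful assistant."}, {"role": "user", "content": "%s"}]}' \
    "$(json_escape "$1")" "$(json_escape "$2")"
}

# Send the prompt to the local endpoint on TCP port 12434.
ask() {
  curl -s http://localhost:12434/engines/llama.cpp/v1/chat/completions \
    -H "Content-Type: application/json" \
    -d "$(build_payload "$1" "$2")"
}
```

For example, `ask ai/smollm2 "Please write a hello world program in Java." | jq -r '.choices[0].message.content'` prints only the assistant's reply text instead of the full JSON response.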

What you’ve learned

In this section, you learned:

- How to verify and use Docker Model Runner on Docker Desktop

- How to run a model interactively from the CLI

- How to connect to a model using a local OpenAI-compatible API

In the next section, you’ll use Docker Compose to deploy a web-based AI chat interface powered by Docker Model Runner.