Deploy an MCP Server on Raspberry Pi 5 for AI Agent Interaction using OpenAI SDK

Introduction

Introduction to Model Context Protocol (MCP) and Python uv package for local AI agents

Set up an MCP server on Raspberry Pi 5

Build and run an AI agent on your development machine

Next Steps

Deploy an MCP Server on Raspberry Pi 5 for AI Agent Interaction using OpenAI SDK

Model Context Protocol (MCP)

The Model Context Protocol (MCP) is an open specification designed to connect Large Language Model (LLM) agents to the context they need — including local sensors, databases, and SaaS APIs. It enables on-device AI agents to interact with real-world data through a plug-and-play protocol that works with any LLM framework, including the OpenAI Agent SDK.

Why use MCP?

Plug-and-play integrations: a growing catalog of pre-built MCP servers (such as filesystem, shell, vector stores, and web-scraping) gives your agent instant superpowers - no custom integration or glue code required.

Model/vendor agnostic: as the protocol lives outside the model, you can swap models like GPT-4, Claude, or your own fine-tuned model without touching the integration layer.

Security by design: MCP encourages running servers inside your own infrastructure, so sensitive data stays within your infrastructure unless explicitly shared.

Cross-ecosystem momentum: recent roll-outs from an official C# SDK to Wix’s production MCP server and Microsoft’s Azure support show the MCP spec is gathering real-world traction.

What is uv?

uv is a fast, Rust-built Python package manager that simplifies dependency management. It’s designed for speed and reliability, making it ideal for setting up local AI agent environments on constrained or embedded devices like the Raspberry Pi 5.

Some key features:

- Built in Rust for performance.

- Resolves dependencies and installs packages in one step.

- Optimized for local LLM workloads, embedded AI systems, and containerized Python environments.

For further information on uv, see:

https://github.com/astral-sh/uv

.

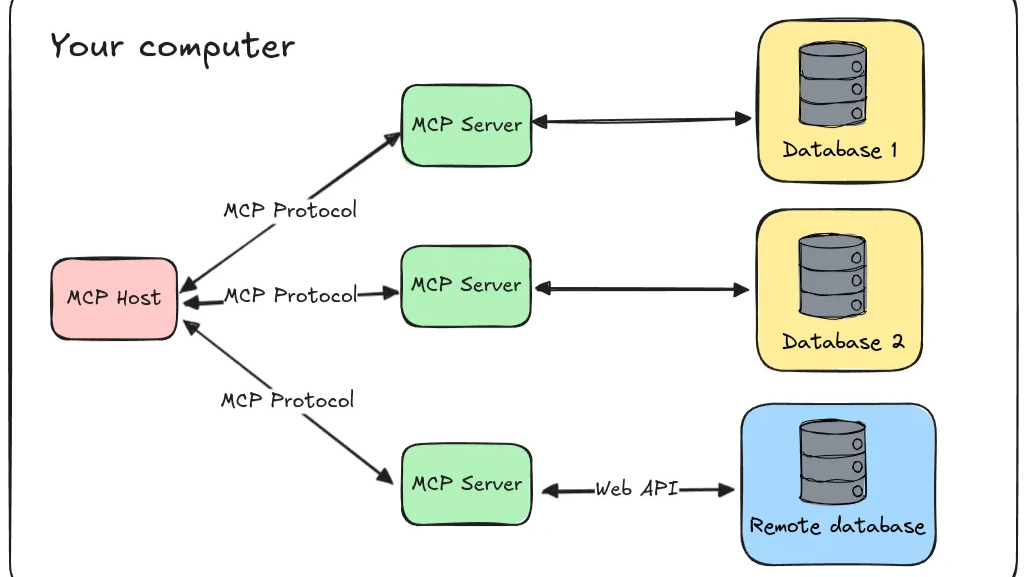

A high-level view of the architecture

Figure: High-level view of the architecture of the Model Context Protocol (MCP) for local AI agent integration with real-world data sources.

Each component in the diagram plays a distinct role in enabling AI agents to interact with real-world context:

- The MCP Host is the LLM-powered application (such as Claude Desktop, an IDE plugin, or an application built with the OpenAI Agents SDK).

- The MCP Client is the runtime shim that keeps a 1-to-1 connection with each server.

- The MCP Server is a lightweight process that advertises tools (functions) over MCP.

- The Local data sources are files, databases, or sensors your server can read directly.

- The Remote services are external APIs the server can call on the host’s behalf.

Learn more about AI Agents in the Learning Path Deploy an AI Agent on Arm with llama.cpp and llama-cpp-agent using KleidiAI .

Section summary

This page introduces MCP and uv as foundational tools for building fast, secure, and modular AI agents that run efficiently on edge devices like the Raspberry Pi 5.